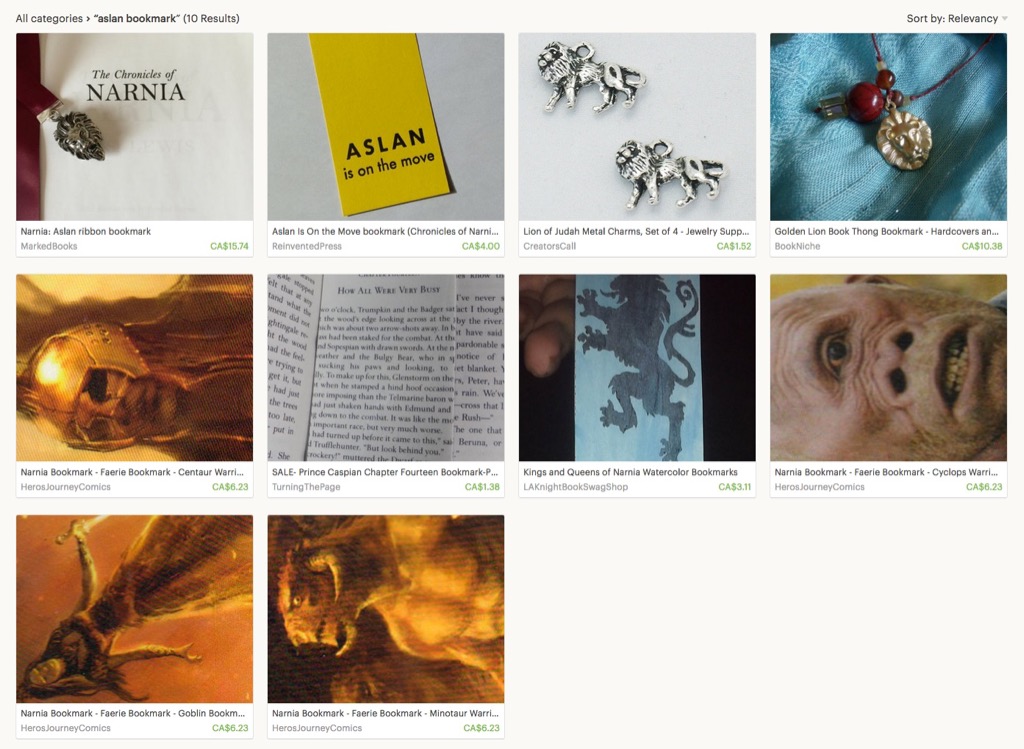

If I do say so myself, I think I own the “Aslan bookmark” category on Etsy, with the addition of the recently-printed Aslan Is On the Move piece. Get yours today!

I spotted this Robert Harris painting, Study For Composing His Serenade, in a room at Government House in Charlottetown recently; it’s on loan from the Confederation Centre Art Gallery (Oil on canvas, Gift of the Robert Harris Trust, 1965, CAG H-250).

It’s disquieting how much the mandolin player in the painting resembles Ron Palillo’s character Arnold Horshack from the 1970s television show Welcome Back Kotter:

Regardless of the Horshack-doppelgänger coincidence, it’s a lovely painting.

If, like me, you are something of a Kotter-o-phile, be sure to listen to this final episode of the ill-fated podcast Mystery Show, an episode that, ironically given the photo of Horshack above, is all about lunch boxes.

Robin Sloan writes about deep-learning-assisted-writing, and captures something I’ve been thinking about for a long time:

That’s all buoyed by a strong (recent?) culture of clear explanation. My excited friend claims this has been as crucial to deep learning’s rise as the (more commonly-discussed) availability of fast GPUs and large datasets. Having benefited from that culture myself, it seems to me like a reasonable argument, and an important thing to recognize.

You could argue that the web itself could not have happened without a culture of clear explanation (supplemented greatly by View Source).

It’s why I believe so passionately in the obligation to explain.

My friend Dan the Cartographer came along to the Reinventorium with his children this afternoon for a tour of the letterpress shop. I took the opportunity to set a brief passage from The Lion, the Witch and the Wardrobe, a line from the eighth chapter, where the children encounter the Beavers for the first time (emphasis mine):

“That’s right,” said the Beaver. “Poor fellow, he got wind of the arrest before it actually happened and handed this over to me. He said that if anything happened to him I must meet you here and take you on to—” Here the Beaver’s voice sank into silence and it gave one or two very mysterious nods. Then signalling to the children to stand as close around it as they possibly could, so that their faces were actually tickled by its whiskers, it added in a low whisper—

“They say Aslan is on the move—perhaps has already landed.”

And now a very curious thing happened. None of the children knew who Aslan was any more than you do; but the moment the Beaver had spoken these words everyone felt quite different. Perhaps it has sometimes happened to you in a dream that someone says something which you don’t understand but in the dream it feels as if it had some enormous meaning—either a terrifying one which turns the whole dream into a nightmare or else a lovely meaning too lovely to put into words, which makes the dream so beautiful that you remember it all your life and are always wishing you could get into that dream again. It was like that now. At the name of Aslan each one of the children felt something jump in his inside. Edmund felt a sensation of mysterious horror. Peter felt suddenly brave and adventurous. Susan felt as if some delicious smell or some delightful strain of music had just floated by her. And Lucy got the feeling you have when you wake up in the morning and realise that it is the beginning of the holidays or the beginning of summer.

I set ASLAN in 30 pt. Futura Bold, and “is on the move” in 24 pt. Futura; the paper is yellow card I purchased while I was in Japan.

I walked Dan’s family through the process of setting the type, setting up the Golding Jobber № 8, doing makeready, and printing. A good time, I think, was had by all.

Here’s what the Charlottetown Farmers’ Market looks like when you’re here early (which we almost never are).

Ironically, this earliness followed on from an early morning email exchange with my friend Dave about early rising (which we almost never do).

Fast coffee line! No claustrophobia! Abundant tempeh! What’s not to love?

I found today that it’s possible to turn a Raspberry Pi 3 into a beacon that can be discovered, via Bluetooth LE, by mobile devices nearby.

I took the Pi I use as an OSMC media server here in the office, SSHed to the command line, and then:

sudo connmanctl enable bluetooth

sudo hciconfig hci0 up

sudo hciconfig hci0 leadv 3

sudo hcitool -i hci0 cmd 0x08 0x0008 1f 02 01 06 03 03 aa fe 17 16 aa fe 10 00 02 72 65 69 6e 76 65 6e 74 6f 72 69 75 6d 07 3f 62 6e

The final line is an encoded representation of “show the URL for the Reinventorium” that I calculated with this handy tool.

With this in place, the Google Nearby tool automatically discovers the new beacon, and provides me with a link to the URL:


The only snag I ran into is that the Reinventorium page that I created for the link originally had bad metadata, which I needed to update on the site. There didn’t appear to be any way to cause the Google Nearby app to refresh this metadata other than making slight adjustments to the target URL in the beacon.

Thank you to my friend Jonas for originally turning me on to this emerging technology.

The CBC is holding a public summit on the “changing nature of news” on March 24 at the Confederation Centre of the Arts, hosted by Peter Mansbridge and including some of the country’s preëminent journalists.

It was announced yesterday that free tickets would be available starting at noon today at the Confederation Centre box office, and online starting on Monday.

I dropped by to pick some up this afternoon at 12:30 and found a bunch of disappointed people waiting in line; they’d just learned that the event was sold out after only 30 minutes. As one particularly disturbed fellow said on his way out, “this riles me up to no end.”

While I’m sad we won’t be able to attend, I do take some comfort from the notion that an event on the “changing nature of news” sold out as fast as the Leonard Cohen concert a few years ago.

In case they’ve escaped your notice, Port Cities is a new Nova Scotia supergroup that deserves your attention. They are releasing a new album here in Charlottetown on March 24, 2017. Unfortunately, this date conflicts with a performance across the street at the same time by Peter Mansbridge’s supergroup. An embarrassment of riches in the neighbourhood on that night.

Now that my phone has received the Google Assistant update, I’ve got both it and our Amazon Echo hooked up to the Wemo switches for our living room light and television.

So here’s a little video showing them both at work on the same task:
