Google Lens successfully identified Ethan as a Standard Poodle.

“At the withers” was new to me; here’s what it means:

The highest part of a horse’s back, lying at the base of the neck above the shoulders. The height of a horse is measured to the withers.

A project took me to the top of the Holman Grand this morning, and I took the opportunity to take some photos from different vantage points.

By the time I got home, Google Photos had, automatically and without prompting, stitched them together into a panorama.

Every Saturday morning at the Charlottetown Farmers’ Market, after we have our smoked salmon bagel and coffee (or smoothie), we swing around to Lady Baker’s Tea, where we order up whatever the iced tea of the day is.

Often we’ll take an extra moment and have a chat with personable owner Katherine Burnett or one of her crack team, and that’s exactly what we did last Saturday: I asked Katherine where the best place downtown to get a wide selection of her retail tea was, and she invited me to come to their new location in the basement of the Kirk of St. James.

As you might imagine, given my recent relocation to a protestant church basement myself, hilarity ensued, and plans for each of us to make a pilgrimage to the other’s operation were hatched.

All of which led me to decide that what we really need to do next is to conjure up a mutual aid society for those of us running businesses from basements, and this begat the launching, via printing press, of the Underground Business Society.

Once the cards are dry, I’ll launch the formal membership drive. Besides me and Lady Baker’s, I can think of Lightning Bolt Comics, and the tartan annex of Northern Watters Knitwear.

Can you suggest other basement-location Charlottetown businesses?

Ton wants to count the number of blog posts he writes per week. I responded with a shell script, but without any documentation.

Here’s the missing manual.

First, the entire script:

curl -s https://www.zylstra.org/blog/feed/ | \
grep pubDate | \
sed -e 's/<pubDate>//g' | \
sed -e 's/<\/pubDate>//g' | \
while read -r line ; do
  date -j -f "%a, %d %b %Y %T %z" "$line" "+%V"
done | \
sort -n | \
uniq -c

The basic idea here is “get the RSS feed of Ton’s blog, pull out the publication dates of each post, convert each date to a week number, and then total posts by week number.” As of this writing, the output of the script is:

5 23

6 24

4 25

Which tells me, for example, that this week, which is week 25 of 2018, Ton has written 4 posts so far.

Here’s a line by line breakdown of how the script works.

curl -s https://www.zylstra.org/blog/feed/ | \

Use the curl command, in silent mode (-s) so as to not print a lot of unhelpful progress information, to get Ton’s RSS feed. This returns a chunk of XML, with one item element for each post, like this:

<item>

<title></title>

<link>https://www.zylstra.org/blog/2018/06/4125/</link>

<comments>https://www.zylstra.org/blog/2018/06/4125/#comments</comments>

<pubDate>Wed, 06 Jun 2018 11:23:08 +0000</pubDate>

<dc:creator><![CDATA[Ton Zijlstra]]></dc:creator>

<category><![CDATA[microblog]]></category>

<category><![CDATA[blogroll]]></category>

<category><![CDATA[opml]]></category>

<guid isPermaLink="false">https://www.zylstra.org/blog/?p=4125</guid>

<description><![CDATA[Frank Meeuwsen describes 5 blogs he currently enjoys following, and in an aside mentions he now follows 250+ blogs. Quick question for him: do you publish your list of feeds somewhere? My list of about a 100 feeds is on the right hand side.]]></description>

<content:encoded><![CDATA[<p>Frank Meeuwsen <a href="http://diggingthedigital.com/5-favoriete-blogs/">describes 5 blogs he currently enjoys following</a>, and in an aside mentions he now follows 250+ blogs. Quick question for him: do you publish your list of feeds somewhere? My <a href="https://zylstra.org/tonsrss_june2018.opml">list of about a 100 feeds</a> is on the right hand side.</p>

]]></content:encoded>

<wfw:commentRss>https://www.zylstra.org/blog/2018/06/4125/feed/</wfw:commentRss>

<slash:comments>2</slash:comments>

<post-id xmlns="com-wordpress:feed-additions:1">4125</post-id>

</item>

Notice that each post has a pubDate element that identifies the date it was published; that’s the date I need to focus on.

The vertical bar (|) at the end of this first line says “take the output of this command, and provide it as the input for the next command” and the backslash (\) says “this script continues on the next line; we’re not over yet.”
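As a tiny illustration of those two characters, here is a sketch with made-up sample words (nothing from Ton’s feed; the function name sorted_words is hypothetical). The output of printf is piped into sort, and the backslash lets the command carry on to a second line:

```shell
# Hypothetical sample data: three words, one per line.
sorted_words() {
  printf 'pear\napple\nfig\n' | \
    sort
}

# Prints apple, fig, pear, one per line.
sorted_words
```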

grep pubDate | \

This extracts only the lines that have pubDate in them, resulting in:

<pubDate>Wed, 20 Jun 2018 09:04:14 +0000</pubDate>

<pubDate>Tue, 19 Jun 2018 19:41:41 +0000</pubDate>

<pubDate>Tue, 19 Jun 2018 18:06:01 +0000</pubDate>

<pubDate>Tue, 19 Jun 2018 10:19:59 +0000</pubDate>

<pubDate>Sun, 17 Jun 2018 18:31:41 +0000</pubDate>

<pubDate>Sat, 16 Jun 2018 13:02:18 +0000</pubDate>

<pubDate>Sat, 16 Jun 2018 08:38:42 +0000</pubDate>

<pubDate>Fri, 15 Jun 2018 13:26:06 +0000</pubDate>

<pubDate>Fri, 15 Jun 2018 13:05:11 +0000</pubDate>

<pubDate>Mon, 11 Jun 2018 06:49:12 +0000</pubDate>

<pubDate>Sun, 10 Jun 2018 19:29:24 +0000</pubDate>

<pubDate>Sun, 10 Jun 2018 19:02:20 +0000</pubDate>

<pubDate>Fri, 08 Jun 2018 10:49:14 +0000</pubDate>

<pubDate>Thu, 07 Jun 2018 19:11:10 +0000</pubDate>

<pubDate>Wed, 06 Jun 2018 11:23:08 +0000</pubDate>

Now I just have the dates: this is coming along just fine. Next, I need to remove the opening and closing pubDate tags:

sed -e 's/<pubDate>//g' | \
sed -e 's/<\/pubDate>//g' | \

This uses sed, which you can think of as an “automated text editor,” to search for those two tags and replace them with nothing; the result is:

Wed, 20 Jun 2018 09:04:14 +0000

Tue, 19 Jun 2018 19:41:41 +0000

Tue, 19 Jun 2018 18:06:01 +0000

Tue, 19 Jun 2018 10:19:59 +0000

Sun, 17 Jun 2018 18:31:41 +0000

Sat, 16 Jun 2018 13:02:18 +0000

Sat, 16 Jun 2018 08:38:42 +0000

Fri, 15 Jun 2018 13:26:06 +0000

Fri, 15 Jun 2018 13:05:11 +0000

Mon, 11 Jun 2018 06:49:12 +0000

Sun, 10 Jun 2018 19:29:24 +0000

Sun, 10 Jun 2018 19:02:20 +0000

Fri, 08 Jun 2018 10:49:14 +0000

Thu, 07 Jun 2018 19:11:10 +0000

Wed, 06 Jun 2018 11:23:08 +0000
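The grep and the two sed steps could also be folded into a single sed call. What follows is a sketch of that alternative, not part of the original script: the function name extract_pubdates is made up for illustration, and it assumes each pubDate element sits on a single line, as it does in this feed.

```shell
# extract_pubdates: read RSS XML on stdin, print the text inside each pubDate element.
# -n turns off sed's default printing; the trailing p prints only the lines
# where the substitution matched, so no separate grep is needed.
extract_pubdates() {
  sed -n 's/.*<pubDate>\(.*\)<\/pubDate>.*/\1/p'
}

# Two sample lines; only the pubDate line produces output.
printf '%s\n' \
  '<title>Example</title>' \
  '<pubDate>Wed, 06 Jun 2018 11:23:08 +0000</pubDate>' | extract_pubdates
```

The trade-off is one fewer process in the pipeline in exchange for a denser regular expression; the three-step version is easier to read aloud.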

Next, I need to convert those dates into weeks. Fortunately the date command has an easy way of doing this. Using a while…do…done loop, I convert each line’s date into a week number:

while read -r line ; do
  date -j -f "%a, %d %b %Y %T %z" "$line" "+%V"
done

The key here is that the format string, which follows -f, needs to match the format of the dates I’m converting: each of the placeholders in that string stands for a different part of the date. For example, %a is the abbreviated three-letter name of the day. The -j tells the date command on macOS (and other BSD systems) not to try to set the system clock, just to convert. The +%V is the format string of the output, and it represents the ISO week number of the year of the date. The result is this:

25

25

25

25

24

24

24

24

24

24

23

23

23

23

23

That’s the week number of each of the blog posts, in reverse chronological order. I want the weeks to be in chronological order, so I sort them numerically with:

sort -n | \

To result in:

23

23

23

23

23

24

24

24

24

24

24

25

25

25

25
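The -n matters once single-digit numbers are in play. Here is a quick sketch with made-up week numbers (not from Ton’s feed; the helper name weeks is hypothetical): a plain sort compares character by character and puts 10 ahead of 9, while sort -n compares numeric values.

```shell
# Hypothetical week numbers, one per line.
weeks() { printf '10\n9\n23\n'; }

weeks | sort      # text order: 10, 23, 9
weeks | sort -n   # numeric order: 9, 10, 23
```

Because %V pads its output to two digits (09 rather than 9), a plain sort would happen to work on this script’s data too; -n is simply the safer habit.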

And, finally, I used a superpower of the uniq command, the -c option, which, says the man page, will “Precede each output line with the count of the number of times the line occurred in the input, followed by a single space.” That’s exactly what I want:

uniq -c

Put all that together and you get:

5 23

6 24

4 25

Each week number, preceded by the number of blog posts written that week.
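Two caveats about the script as written: date -j -f is the BSD form that ships with macOS, and a bare week number can’t tell week 25 of 2018 from week 25 of 2019. The sketch below is one way around both, under some assumptions: the function names week_of and posts_per_week are made up for illustration, it falls back to GNU date’s -d option when the BSD form fails, and it labels each week with its ISO year using %G. Setting TZ=UTC keeps a post from sliding into a neighbouring week depending on the machine’s time zone.

```shell
# week_of: convert one pubDate-style date into an ISO year-week label.
week_of() {
  # Try the BSD/macOS form first; fall back to GNU date (Linux) if it fails.
  TZ=UTC date -j -f "%a, %d %b %Y %T %z" "$1" "+%G-W%V" 2>/dev/null ||
    TZ=UTC date -d "$1" "+%G-W%V"
}

# posts_per_week: read RSS XML on stdin, print a post count per ISO week.
posts_per_week() {
  grep pubDate |
    sed -e 's/.*<pubDate>//' -e 's/<\/pubDate>.*//' |
    while read -r line; do
      week_of "$line"
    done |
    sort |
    uniq -c
}

# Usage, with the feed URL from the script above:
# curl -s https://www.zylstra.org/blog/feed/ | posts_per_week
```

A plain sort is enough here because labels like 2018-W23 have a fixed width, so text order and chronological order agree.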

Shell scripting is something I’m so happy to have burned into my muscle memory, as I use it every day to accomplish similar feats. The underlying principle of:

Make each program do one thing well. To do a new job, build afresh rather than complicate old programs by adding new “features”.

is one that’s served me well, and dovetails nicely with how I approach the world.

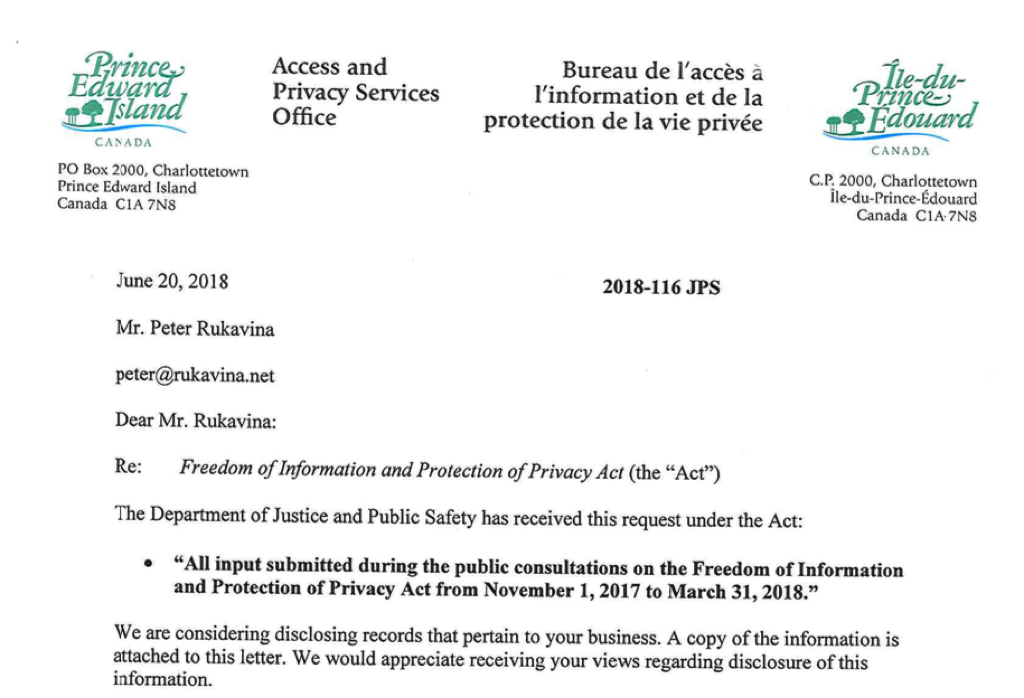

In February I made a written submission to government on its Modernize the Freedom of Information and Protection of Privacy Act paper.

Today I received a letter requesting my views on releasing my submission, itself now a subject of a Freedom of Information request:

The Department of Justice and Public Safety has received this request under the Act:

- “All input submitted during the public consultations on the Freedom of Information and Protection of Privacy Act from November 2, 2017 to March 31, 2018”

We are considering disclosing records that pertain to your business. A copy of the information is attached to this letter. We would appreciate your views regarding disclosure of this information.

My reply was simply:

I have no objection to my submission being released.

It is already public and online at:

https://ruk.ca/content/modernize-freedom-information-and-protection-privacy-act

I assume that my reply is now itself a record subject to future FOIPP requests. So the tail can again eat itself in another round.

There is a gathering of people and their doodles this Saturday, June 23, 2018, at the PEI Humane Society’s dog park. It’s both a fundraiser for the Humane Society and a social gathering for people and dogs.

For the uninitiated, doodles are crossbred poodles. Labradoodles. Goldendoodles. And so forth.

As we’ve been asked, of Ethan (not a doodle), “oh, is he a doodle?” roughly a billion times since he came into our lives, we have some familiarity with the dogs and their obsessive passionate humans.

While simply a standard poodle, Ethan has some apricot in his ears, and that prompts doodle aficionados to flights of fancy. Fortunately, Ethan’s role in Oliver’s life is, in part, to be an entrée to conversation, so this is not a bad thing.

I know about the Romp because I phoned the Humane Society last week to inquire whether their dog park, long closed for renovations, was due to open any time soon. They told me that all that was holding things up was some fencing, and that the fence supplier had been given the Doodle Romp as an immovable deadline.

I received assurances that, should we bring Ethan (not a doodle) to the Doodle Romp, we would not be turned away.

Were Oliver not so averse to committing acts of fraud, I’d try to pull off a “yes he is!”, but we’ll have to play it straight.

This video introduction to LoRaWAN, in addition to being a great introduction to LoRaWAN, is also a great introduction to the ins and outs of sending data by radio. The explanation of the “link budget” was particularly helpful.

While we’re on the topic of (delightful | creepy) computer-assisted lifestyle logistics, I’ve just taken advantage of a feature that I’m pretty sure has been part of the Mac Mail application for a long time, namely its ability to automatically parse out appointments from arbitrary text and allow me to add them to my calendar:

This was almost nothing but helpful.

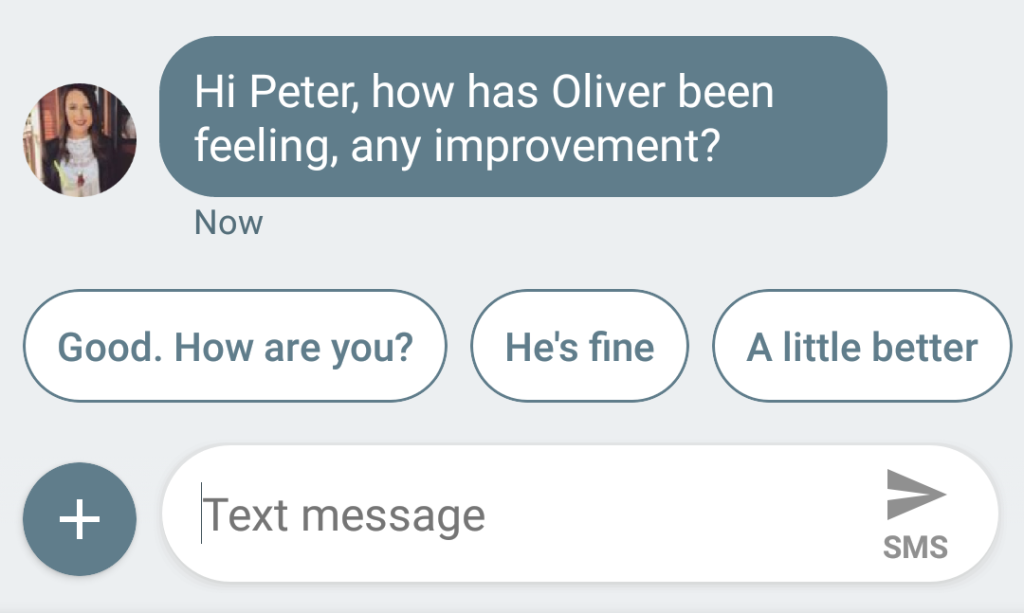

Google is rolling out a lot of updates to its text messaging suite this month; most recent is the appearance of context-sensitive suggested replies.

In this case it did a good job: its suggested replies were very close to what I actually replied, manually.
