The CBC reports that a Nova Scotia teenager has been charged with programmatically accessing public information from a public Government of Nova Scotia website. This is an issue near and dear to my heart, as the accused was charged for engaging in exactly the sort of thing that I do regularly.

For example, earlier this year I was contacted by a Prince Edward Island journalist who was researching the latest plan by the Government of Prince Edward Island to equip the Island with a high-speed Internet backbone. They’d been looking for the tender documents associated with earlier attempts to solve the same problem, but had been stymied by the fact that these older tender documents were seemingly no longer available online, and their access to information requests had remained unfilled; they wanted to know if I could help.

The dropping-out-of-view of the older tenders appears to have been a side-effect of the Province’s migration to a new website: the legacy tender website contained records of tenders extending back to 2002; when it was replaced with a new section on the new website, older tenders were not included in the migration of the search feature, and so the tender they were looking for was no longer online. The confusing thing for the journalist was that, until very recently, they’d been able to use Google to locate older tender documents, and they were showing in Google search results; this was no longer the case, though, and they were wondering whether the documents were still online in some form.

I agreed to assist them in their search, as I’ve a personal interest both in why we haven’t collectively been able to solve the Internet access issue on PEI despite trying for 25 years, and in open data and transparency and access to information.

What I discovered is that the older tender documents are, in fact, still online, on the legacy government website; they’re just not exposed to the new site’s search.

If you take the URL:

http://www.gov.pe.ca/tenders/gettender.php3?number=$tender

and replace $tender with a number from 1 to 5884 (a limit I found simply by experimenting), you can retrieve information for tenders back to this June 6, 2002 tender for a heavy-duty tire changer.

One of the things computers are very good at is iterating. Which is to say, doing the same thing over and over and over again in a loop.

So, armed with what I’d learned about how the still-public legacy tenders are stored, I wrote a short computer program to grab all of them:

for ($tender = 1 ; $tender <= 5884 ; $tender++) {

system("wget \"http://www.gov.pe.ca/tenders/gettender.php3?number=$tender\" -O html/$tender.html");

}
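For readers who don’t have PHP and wget handy, here is a minimal sketch of the same iteration in Python. The URL pattern and the upper bound of 5884 come from the post; the function names, the `html` output directory, the one-second pause and the error handling are assumptions added for illustration, not part of the original script.

```python
import time
import urllib.request
from pathlib import Path

# URL pattern and upper bound are taken from the post; everything else
# (function names, output directory, delay) is a hypothetical choice.
BASE_URL = "http://www.gov.pe.ca/tenders/gettender.php3?number={number}"
LAST_TENDER = 5884


def tender_url(number: int) -> str:
    """Return the legacy URL for one tender number."""
    return BASE_URL.format(number=number)


def fetch_all(out_dir: str = "html", delay_seconds: float = 1.0) -> None:
    """Save each tender page as out_dir/<number>.html, pausing between requests."""
    target = Path(out_dir)
    target.mkdir(exist_ok=True)
    for number in range(1, LAST_TENDER + 1):
        try:
            with urllib.request.urlopen(tender_url(number), timeout=30) as response:
                (target / f"{number}.html").write_bytes(response.read())
        except OSError as error:  # URLError and timeouts are OSError subclasses
            print(f"tender {number}: {error}")
        time.sleep(delay_seconds)
```

Calling `fetch_all()` would walk the whole range. The pause between requests is simply a courtesy to the server, and a failed request is reported and skipped so that one missing tender doesn’t stop the run.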

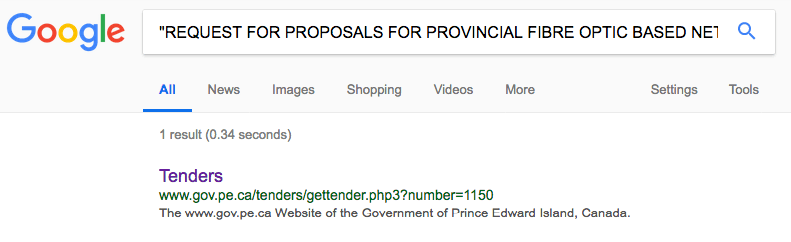

This left me with a collection of HTML documents, one for each tender. And this included the 2002 RFP FOR CONSULTING SERVICES TELECOMMUNICATIONS NETWORK INFRASTRUCTURE, the 2006 REQUEST FOR PROPOSALS FOR PROVINCIAL FIBRE OPTIC BASED NETWORK, and the 2007 REQUEST FOR PROPOSALS FOR OPTO-ELECTRONICS AND RELATED SERVICES. None of these tender documents are accessible through the updated search tool, but they are all still publicly available online, and references to them continue to appear in Google search results.

In the end, then, I was able to supply the journalist with the materials they were looking for, using publicly available copies on the Province’s publicly available webserver. That I happened to access these materials by iterating (“show me tender #1, show me tender #2, show me tender #3, etc.”), with a computer doing the heavy lifting, is immaterial.

I’ve used similar techniques to retrieve information dozens of times over the years. It’s how I was able to provide an RSS feed of Charlottetown Building Permits (which only recently went dark when the City updated its website and made this impossible). It’s how I can continue to provide a visualization of PEI’s electricity load and generation. And it’s how I was able to create an alternative, more flexible corporations search a decade ago when I was otherwise unable to find information about Richard Homburg.

The freedom to retrieve materials that are online at a public URL, whether in a browser, via a script, or through other automated process, is one of the fundamental freedoms of the Internet, and it’s one of the things that distinguish the Internet from all networks that came before it.

The story of the accused teenager in Nova Scotia bears a striking similarity to my search for tenders and my search for corporations information; from the CBC story (emphasis mine):

Around the same time, his Grade 3 class adopted an animal at a shelter, receiving an electronic adoption certificate.

That led to a discovery on the classroom computer.

“The website had a number at the end, and I was able to change the last digit of the number to a different number and was able to see a certificate for someone else’s animal that they adopted,” he said. “I thought that was interesting.”

The teenager’s current troubles arose because he used the same trick on Nova Scotia’s freedom-of-information portal, downloading about 7,000 freedom-of-information requests.

He says his interest stemmed from the government’s recent labour troubles with teachers.

“I wanted more transparency on the teachers’ dispute,” he said.

After a few searches for teacher-related releases on the provincial freedom-of-information portal, he didn’t find what he was looking for.

“A lot of them were just simple questions that people were asking. Like some were information about Syrian refugees. Others were about student grades and stuff like that,” he said.

So instead, he decided to download all the files to search later.

“I decided these are all transparency documents that the government is displaying. I decided to download all of them just to save,” he said.

He says it took a single line of code and a few hours of computer time to copy 7,000 freedom-of-information requests.

“I didn’t do anything to try to hide myself. I didn’t think any of this would be wrong if it’s all public information. Since it was public, I thought it was free to just download, to save,” he said.

The sad irony of this tale is that it was freedom of information documents that the accused downloaded, and in among those files were ones that had not been properly redacted. That is certainly a lapse in security on the part of the government that bears investigation, but the accused bears no responsibility for downloading publicly-available files, whether mistakenly made public or not.

As you can imagine, I feel a great sense of solidarity with the accused, and I’ve reached out to their lawyer with an offer to help in any way I can. Beyond the immediate concern for them, however, I’ve a broader concern about the chilling effect of the heavy-handed action the Nova Scotia government has launched against the freedom to iterate.

They are threatening one of the pillars of a free and open Internet; we need to stand up for that.

A GoFundMe page has been established to support the accused.

So far today I have:

- Taken Ethan to be groomed (they shaved great gobs of hair from him; he looks like a naked mole-rat now).

- Inspired by Ethan, gone to get my own hair cut at Ray’s Place. I do not look like a mole-rat. At least not completely.

- Donated plasma at Canadian Blood Services.

- Ordered a new pair of Brax trousers from Dow’s, something that’s been on my to-do list for ages.

I’m always asking questions of the friendly and helpful nurses at Canadian Blood Services, and here’s what I learned today:

- My hematocrit reading was 42%, which means that 42% of my blood is red blood cells; this means, give-or-take, that 58% of my blood volume is plasma for the taking.

- Each plasma donation involves extracting 500 ml out of me.

- The average person has 5 litres of blood (I learned this from Wikipedia).

If my math is right, that means that roughly, 2900 ml of my blood (58% of 5 litres) is plasma, and that I donated only 17% of that to the cause, leaving me with 2400 ml still sloshing around inside me to do whatever it is that plasma does.
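To double-check that arithmetic, here is a small sketch using only the figures quoted above (42% hematocrit, 5 litres of blood, a 500 ml donation). Treating everything that isn’t red blood cells as plasma is the same give-or-take simplification made in the text.

```python
# Figures from the post: hematocrit 42%, 5 litres of blood, 500 ml donated.
HEMATOCRIT = 0.42
TOTAL_BLOOD_ML = 5000
DONATION_ML = 500

# Everything that is not red blood cells is counted as plasma (a simplification).
plasma_ml = round((1 - HEMATOCRIT) * TOTAL_BLOOD_ML)
donated_share = DONATION_ML / plasma_ml
remaining_ml = plasma_ml - DONATION_ML

print(f"plasma: {plasma_ml} ml")          # plasma: 2900 ml
print(f"donated: {donated_share:.0%}")    # donated: 17%
print(f"remaining: {remaining_ml} ml")    # remaining: 2400 ml
```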

It’s only 3:35 p.m.–I have high hopes for the remainder of the day.

I’ve been on a skateboard only once in my life. My friend Timmy, who lived up on the 13th Concession, made his own skateboard out of a piece of wood and some casters, and he let me try it out one afternoon in his basement. I fell down. I did not get back up again.

Other than an aborted attempt to windsurf, on a lake near Parham, Ontario, in 1988, I have been absent from the balancing-on-a-board sports scene ever since.

The arrival of electric skateboards on the scene recently, most prominently under the feet of prolific YouTuber Casey Neistat and his Boosted board, has caused me to reconsider for the first time. They look like so much fun!

I was disabused of this notion, however, after reading some helpful Reddit threads, which have me convinced that I would almost immediately and irreparably injure myself if I were to try.

So I will remain with feet on ground.

My friend Ton’s WordPress blog supports Pingbacks, which the WordPress documentation describes like this:

A pingback is a special type of comment that’s created when you link to another blog post, as long as the other blog is set to accept pingbacks. To create a pingback, just link to another WordPress blog post. If that post has pingbacks enabled, the blog owner will see a pingback appear in their comments section

So, for example, if I want to indicate to Ton that this post is in reference to his post from earlier today, Reinventing Distributed Conversations, his post contains guidance for my blogging engine as to where to send that notification:

<link rel="pingback" href="https://www.zylstra.org/wp/xmlrpc.php" />

(I found that by using View > Source from my browser menu when reading Ton’s post).
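The View > Source step can also be automated. Here is a minimal sketch, using only Python’s standard library, that pulls the Pingback endpoint out of a page’s HTML; the class and function names are made up for illustration, and the sample markup is just the `link` element quoted above. (The Pingback specification also allows a server to advertise the endpoint in an `X-Pingback` HTTP header, which this sketch doesn’t check.)

```python
from html.parser import HTMLParser
from typing import Optional


class PingbackLinkFinder(HTMLParser):
    """Remember the href of the first <link rel="pingback"> element seen."""

    def __init__(self) -> None:
        super().__init__()
        self.endpoint: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "pingback" in rel_values and self.endpoint is None:
            self.endpoint = attributes.get("href")


def find_pingback_endpoint(html: str) -> Optional[str]:
    """Return the advertised Pingback endpoint, or None if there isn't one."""
    finder = PingbackLinkFinder()
    finder.feed(html)
    return finder.endpoint


sample = (
    '<html><head>'
    '<link rel="pingback" href="https://www.zylstra.org/wp/xmlrpc.php" />'
    '</head></html>'
)
print(find_pingback_endpoint(sample))
```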

Unfortunately, contemporary Drupal (the blogging software I’m using to write this) has no built-in support for Pingbacks. There have been add-on modules in the past, but none have been updated recently, and none are covered by Drupal’s security advisory policy:

- Vinculum (not updated for 3 years)

- Pingback (never released for Drupal 7)

- Trackback (never released for Drupal 7)

I suspect this is because as Drupal transitioned from Drupal 6 to Drupal 7, the commercial web had taken over, the social web was withering on the vine, and there was little or no motivation to develop modules for a standard that few were using.

In the interim there are workarounds: this script allows me, in theory, to notify Ton’s blog manually, from the command line, with a Pingback, like this:

./pingback.sh \

https://ruk.ca/content/resuscitating-pingbacks \

https://www.zylstra.org/blog/2018/04/reinventing-distributed-conversations/

I tried that out just now, but it didn’t work: there’s too much in the script that’s not supported by macOS.

So, using guidance here, I tried doing this very manually. I created an XML payload:

<?xml version="1.0" encoding="iso-8859-1"?>

<methodCall>

<methodName>pingback.ping</methodName>

<params>

<param>

<value>

<string>https://ruk.ca/content/resuscitating-pingbacks</string>

</value>

</param>

<param>

<value>

<string>https://www.zylstra.org/blog/2018/04/reinventing-distributed-conversations/</string>

</value>

</param>

</params>

</methodCall>

and then used cURL to POST that to Ton’s Pingback endpoint:

curl -X POST -d @pingback.xml https://www.zylstra.org/wp/xmlrpc.php

But the response I got back suggests that there’s something up on Ton’s end:

<!DOCTYPE HTML PUBLIC "-//IETF//DTD HTML 2.0//EN">

<html><head>

<title>403 Forbidden</title>

</head><body>

<h1>Forbidden</h1>

<p>You don't have permission to access /wp/xmlrpc.php

on this server.</p>

</body></html>

I suspect that might be due to Ton or his surrogates closing off access to Pingback because of a vulnerability.

So no Pingbacks for now, both because my Drupal won’t send them and because Ton’s WordPress won’t receive them.
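For reference, the manual attempt above (build an XML-RPC `pingback.ping` call, then POST it to the endpoint) can be sketched in Python with the standard library. The URLs are the ones used in this post; the function names are hypothetical, and the sketch only prepares the request rather than sending it, since the endpoint currently answers 403.

```python
import urllib.request
import xmlrpc.client

SOURCE = "https://ruk.ca/content/resuscitating-pingbacks"
TARGET = "https://www.zylstra.org/blog/2018/04/reinventing-distributed-conversations/"
ENDPOINT = "https://www.zylstra.org/wp/xmlrpc.php"


def build_pingback_payload(source: str, target: str) -> bytes:
    """Serialize a pingback.ping(source, target) XML-RPC call."""
    xml = xmlrpc.client.dumps((source, target), methodname="pingback.ping")
    return xml.encode("utf-8")


def build_pingback_request(endpoint: str, source: str, target: str) -> urllib.request.Request:
    """Prepare (but do not send) the POST request carrying the payload."""
    return urllib.request.Request(
        endpoint,
        data=build_pingback_payload(source, target),
        headers={"Content-Type": "text/xml"},
        method="POST",
    )


request = build_pingback_request(ENDPOINT, SOURCE, TARGET)
print(request.data.decode("utf-8"))
```

Passing the prepared request to `urllib.request.urlopen()` would actually send it; against a server that refuses the call, that raises an `HTTPError` instead of returning a response.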

However, thanks to the diligent work of the IndieWeb tribe, there’s a more modern standard, called Webmention, that might allow us to get to the same place: there’s a plugin for WordPress for Ton’s end, but only support via the atrophied Vinculum module for Drupal; I’ll dig into that and see if there’s a way forward.

In the meantime, I’ll just manually leave a comment on Ton’s blog pointing here.

Update: I reinstalled Vinculum and have it set up to both send and receive Webmentions. As a result, if you View > Source on this page, you’ll find:

<link rel="webmention" href="https://ruk.ca/node/22274/webmention" />

This means that this page should be able to receive Webmentions. But it seems to be only partially working. Anyone care to send?
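If you’d like to try, a Webmention is just a form-encoded POST with two fields, `source` (the page doing the linking) and `target` (the page being linked to), sent to the endpoint advertised in that `link` element. Here is a minimal sketch; the `source` URL is a placeholder for your own post, I’m assuming the `target` is this post’s public URL, and the function name is hypothetical. The request is built but not sent.

```python
import urllib.parse
import urllib.request


def build_webmention_request(endpoint: str, source: str, target: str) -> urllib.request.Request:
    """Prepare a Webmention: a form-encoded POST with source and target."""
    body = urllib.parse.urlencode({"source": source, "target": target}).encode("ascii")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


request = build_webmention_request(
    "https://ruk.ca/node/22274/webmention",
    source="https://example.com/my-reply",  # placeholder: your page that links here
    target="https://ruk.ca/content/resuscitating-pingbacks",  # assumed URL of this post
)
print(request.get_method(), request.full_url)
print(request.data.decode("ascii"))
```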

My friend Ton has been blogging up a storm on the blogging-about-blogging front of late:

- From Semi Freddo to Full Cold Turkey with FB

- Algorithms That Work For Me, Not Commodotise Me

- Revisiting the Personal Presence Portal

- Adding a Wiki-like Section

He is, as such, a man after my own heart.

And he’s inspired me to recall the long-dormant feeling of how it felt to be building the Internet together, back in the days before we outsourced this to commercial interests as a way of saving money, learning curve and messiness.

The difference now, a year after I decamped from the commercial web, is that when I did that I was focused on a combination of “the commercial web is evil” and a profound sense of personal failure at having been part of letting that happen, whereas now I’m filled with tremendous hope.

It’s not a coincidence that I work under a banner called Reinvented: time and time and time again over the course of my life I’ve found utility in returning to earlier iterations of technology.

Letterpress printing has taught me so much about the true nature of letterforms, in a way that the digital never could.

Keeping a ready supply of cards, envelopes and stamps in the office has kindled a practice of regularly thanking people for kindnesses, advocacies, and boldnesses.

Teaching myself to sketch has changed the way I look at architecture.

What Ton’s writing–and the zeitgeist that surrounds it–have made me remember is that we invented blogging already and then, not completely but almost, we threw it away.

But all the ideas and tools and debates and challenges we hashed out 20 years ago on this front are as relevant today as they were then; indeed they are more vital now that we’ve seen what the alternatives are. And, fortunately, often we’ve recorded much of what we learned in our blogs themselves.

So I propose we move on from “Facebook is evil” to “blogging is awesome–how can we continue to evolve it.” And, in doing so, start to put the inter back into the Internet.

Nine years ago Oliver presented a delightful video tour of our Yotel room at Heathrow Airport; it may be the best review of anything, ever (of course I am somewhat biased in this regard).

In this spirit, albeit in a non-narrated and decidedly less delightful manner, I recorded a tour of my room at the Yotel in Boston, where I stayed last week en route back to PEI.

Last year I posted some instructions–mostly for my own use–for transcoding video I’d recorded and uploading the results to Amazon S3 so that I could embed video in an HTML5 video element here on the blog.

While that proved useful, and a commercial-free alternative to posting to YouTube, the friction involved in the multi-step process was enough to dissuade me from posting video as often as I would like.

So today I set out to streamline this through automation, and I’m documenting the process here, both so that I don’t forget how I did it and as a guide to others.

The starting point is a video recorded on my Nextbit Robin phone: videos I record with the phone are automatically synced to Google Photos where I can download them as MP4 files.

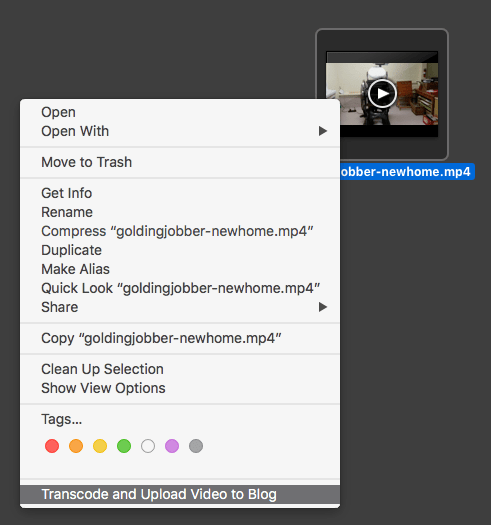

So I begin, say, with a file called goldingjobber-newhome.mp4 on my Mac desktop.

To have a version of that video that will play across different browsers requires that I transcode (aka “convert”) the video to WebM format. I experimented with doing this locally on my Mac using ffmpeg, but this took a really long time, more than my day-to-day patience would support.

Fortunately, Amazon Web Services has a very-low-cost web service for doing exactly this called Amazon Elastic Transcoder, and it works relatively quickly by comparison.

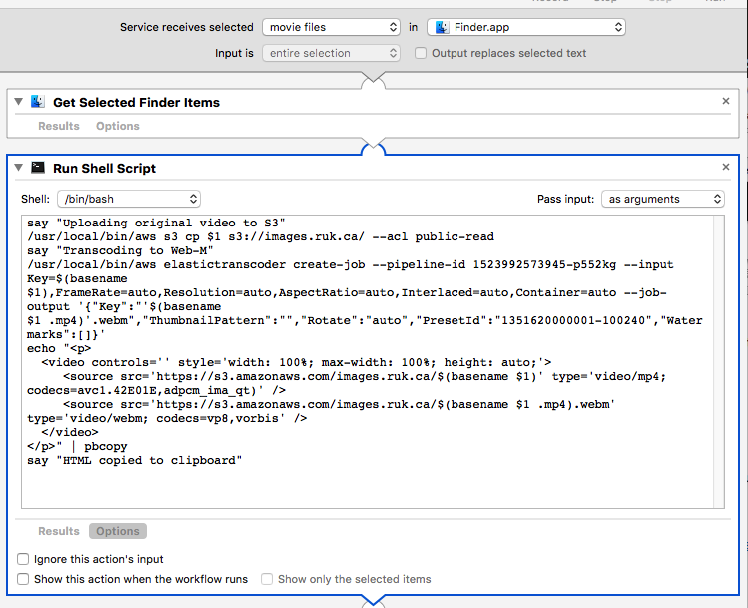

So I wrote a Mac Automator script to do the following:

- Upload the MP4 file on my Desktop to Amazon S3.

- Transcode the video to WebM.

- Put a chunk of HTML on my Mac clipboard that I can paste into the source of a blog post.

The Automator script looks like this:

say "Uploading original video to S3"

/usr/local/bin/aws s3 cp $1 s3://images.ruk.ca/ --acl public-read

say "Transcoding to Web-M"

/usr/local/bin/aws elastictranscoder create-job --pipeline-id 1523992573945-p552kg --input Key=$(basename $1),FrameRate=auto,Resolution=auto,AspectRatio=auto,Interlaced=auto,Container=auto --job-output '{"Key":"'$(basename $1 .mp4)'.webm","ThumbnailPattern":"","Rotate":"auto","PresetId":"1351620000001-100240","Watermarks":[]}'

echo "<p>

<video controls='' style='width: 100%; max-width: 100%; height: auto;'>

<source src='https://s3.amazonaws.com/images.ruk.ca/$(basename $1)' type='video/mp4; codecs=avc1.42E01E,adpcm_ima_qt' />

<source src='https://s3.amazonaws.com/images.ruk.ca/$(basename $1 .mp4).webm' type='video/webm; codecs=vp8,vorbis' />

</video>

</p>" | pbcopy

say "HTML copied to clipboard"

Going through this line by line, here’s what’s happening:

/usr/local/bin/aws s3 cp $1 s3://images.ruk.ca/ --acl public-read

This takes the file I’ve highlighted on the Desktop and sent to the Automator script ($1) and uses the Amazon Web Services command line tool aws to upload it to Amazon S3 as a publicly-readable file. When this is complete, I end up with a file at https://s3.amazonaws.com/images.ruk.ca/goldingjobber-newhome.mp4 that I can then use as the starting point for transcoding.

/usr/local/bin/aws elastictranscoder create-job --pipeline-id 1523992573945-p552kg --input Key=$(basename $1),FrameRate=auto,Resolution=auto,AspectRatio=auto,Interlaced=auto,Container=auto --job-output '{"Key":"'$(basename $1 .mp4)'.webm","ThumbnailPattern":"","Rotate":"auto","PresetId":"1351620000001-100240","Watermarks":[]}'

This is where the heavy lifting happens: I use the aws tool to create what Elastic Transcoder calls a “job,” passing the name of the file I uploaded to S3 as the input:

--input Key=$(basename $1)

And setting the output filename as that same name, minus the .mp4 extension, with a .webm extension:

--job-output '{"Key":"'$(basename $1 .mp4)'.webm"...
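The shell quoting in that `--job-output` argument is the fiddliest part of the script, so here is a hedged sketch of the same argument-building step in Python, where `json.dumps` produces the JSON and no nested quoting is needed. The pipeline ID, preset ID and flags are copied from the command above; the function name and the example path are hypothetical. One small difference: `os.path.splitext` strips whatever extension the file has, whereas `basename $1 .mp4` only strips `.mp4`.

```python
import json
import os

PIPELINE_ID = "1523992573945-p552kg"  # from the post
PRESET_ID = "1351620000001-100240"    # from the post


def build_transcode_command(path: str) -> list:
    """Build the argument list for the aws elastictranscoder create-job call."""
    name = os.path.basename(path)          # e.g. goldingjobber-newhome.mp4
    stem, _extension = os.path.splitext(name)
    job_input = (
        f"Key={name},FrameRate=auto,Resolution=auto,"
        "AspectRatio=auto,Interlaced=auto,Container=auto"
    )
    job_output = json.dumps({
        "Key": f"{stem}.webm",
        "ThumbnailPattern": "",
        "Rotate": "auto",
        "PresetId": PRESET_ID,
        "Watermarks": [],
    })
    return [
        "/usr/local/bin/aws", "elastictranscoder", "create-job",
        "--pipeline-id", PIPELINE_ID,
        "--input", job_input,
        "--job-output", job_output,
    ]


# Hypothetical location of the file on the Desktop.
command = build_transcode_command("/Users/me/Desktop/goldingjobber-newhome.mp4")
print(command)
```

Because the result is a list rather than a single string, it could be handed to `subprocess.run(command)` without passing through a shell at all, which also sidesteps trouble with spaces in filenames.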

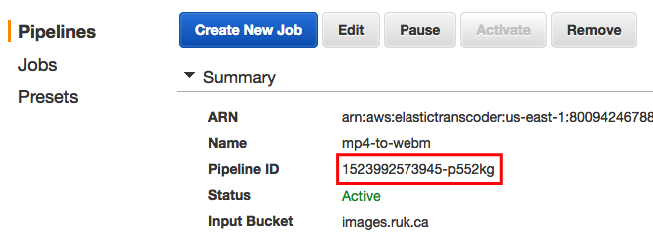

The pipeline-id parameter is the ID of an Elastic Transcoder pipeline that defines the S3 bucket to look in for the input file, the bucket to save the output file in, and the permissions to apply.

The PresetId value is the ID of an Elastic Transcoder preset for transcoding into WebM.

The end result of this process is that, moments later, I end up with a transcoded WebM version of the video at https://s3.amazonaws.com/images.ruk.ca/goldingjobber-newhome.webm.

echo "<p>

<video controls='' style='width: 100%; max-width: 100%; height: auto;'>

<source src='https://s3.amazonaws.com/images.ruk.ca/$(basename $1)' type='video/mp4; codecs=avc1.42E01E,adpcm_ima_qt' />

<source src='https://s3.amazonaws.com/images.ruk.ca/$(basename $1 .mp4).webm' type='video/webm; codecs=vp8,vorbis' />

</video>

</p>" | pbcopy

Finally, I use the Mac pbcopy tool to copy some pre-configured HTML onto the Mac clipboard, ready for pasting into the source of a blog post.
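The templating in that last step boils down to deriving two URLs from one filename. Here is a minimal sketch of that derivation in Python; the bucket URL and the markup follow the script above, while the function name and the example path are hypothetical.

```python
import os

BUCKET_URL = "https://s3.amazonaws.com/images.ruk.ca"  # from the post


def video_html(path: str) -> str:
    """Return the <video> snippet for an uploaded MP4 and its WebM sibling."""
    name = os.path.basename(path)
    stem = os.path.splitext(name)[0]
    return (
        "<p>\n"
        "<video controls='' style='width: 100%; max-width: 100%; height: auto;'>\n"
        f"<source src='{BUCKET_URL}/{name}' "
        "type='video/mp4; codecs=avc1.42E01E,adpcm_ima_qt' />\n"
        f"<source src='{BUCKET_URL}/{stem}.webm' "
        "type='video/webm; codecs=vp8,vorbis' />\n"
        "</video>\n"
        "</p>"
    )


# Hypothetical location of the file on the Desktop.
print(video_html("/Users/me/Desktop/goldingjobber-newhome.mp4"))
```

On a Mac, the returned string could be piped to pbcopy (for example with `subprocess.run(["pbcopy"], input=html.encode("utf-8"))`) to land on the clipboard the same way the shell version does.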

In Automator, this all ends up looking like this:

While this all seems quite complex under the hood, actually using the script is dead-simple: I simply right-click on the video file on my Mac Desktop and select “Transcode and Upload Video to Blog” from the contextual menu.

The result is that all of the above happens, and I end up with this HTML on my Mac clipboard:

<p>

<video controls='' style='width: 100%; max-width: 100%; height: auto;'>

<source src='https://s3.amazonaws.com/images.ruk.ca/goldingjobber-newhome.mp4' type='video/mp4; codecs=avc1.42E01E,adpcm_ima_qt' />

<source src='https://s3.amazonaws.com/images.ruk.ca/goldingjobber-newhome.webm' type='video/webm; codecs=vp8,vorbis' />

</video>

</p>

When I embed that HTML into a blog post, the result looks like this:

I made this spontaneous declaration to a friend this afternoon:

Johnstons River is the new Brooklyn.

Mark my words.

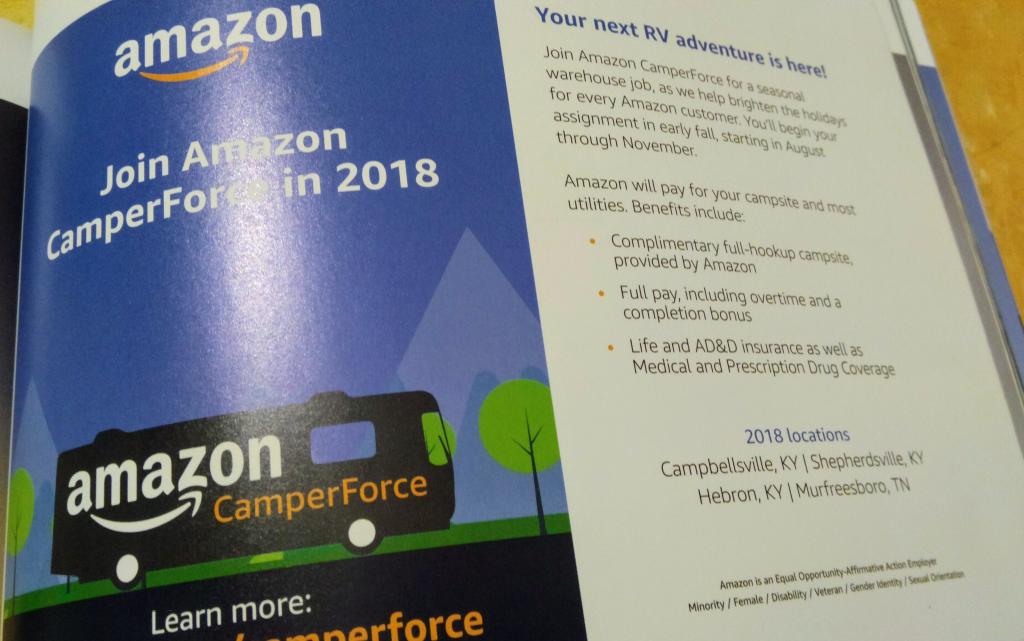

Spotted in Rova magazine, which caters to the #vanlife crowd, an ad for Amazon CamperForce, a recruiting program for people who live in their campers to come and work for Amazon from August to Christmas while living at an Amazon-sponsored campsite.
