Thanks to this very helpful comment, I’ve found that my local OPAC speaks XML. And not just for search results, but for every page in the OPAC! This is truly amazing, and will enable some fantastic tools. Kudos to Dynix and the Provincial Library; I’ll modify the script I created earlier today to take advantage of this capability shortly.

All of this library fun reminded me of my first foray into the world of PEI, libraries and the Internet.

When we first arrived in Prince Edward Island in the early 1990s, the Provincial Library was automated with a custom-designed, mainframe-based system. As any librarian who was there back in the day will attest, it was an evil beast of a system; if I recall correctly, for example, I don’t believe there was any way to search by author or title. I was once told the process for bringing a new book into the system: it involved a complicated set of operations, some of them in Charlottetown (where the mainframe was) and some of them in Morell (where the Provincial Library is headquartered; the victim of a since-aborted plan to decentralize government operations that also saw the road-sign shop move to Tignish and Vital Statistics to Montague).

Needless to say, the system was neither public (in the branches) nor online.

When I started working with the province on their website, I brought up the idea of making the library catalogue searchable on the provincial website, and by some miracle was able to convince the mainframe operators to export the entire list of holdings (about 400,000 records) as an ASCII text file. I use the word “export” here loosely, as the mainframe had no “export” feature per se and they actually had to “print to a file” to get me what I needed.

The state of Linux database technology at the time was very primitive: this was before MySQL, PostgreSQL and the like. If you wanted a Linux-based webserver to use databases, you had to roll your own (early versions of the Visitors Guide search, for example, used Berkeley DB and Perl).

As a result, making the library catalogue searchable required a hand-stitched system. What I ended up with was a Perl wrapper around grep, a general-purpose UNIX text search tool. Users would enter a keyword, we would grep the 400,000-line ASCII file, and display the results. Because the file was somewhat structured, we could even provide automatic hyperlinks to other works by the same author, other books on the same subject and so on.
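For the curious, the grep-style approach can be sketched in a few lines. This is a rough Python illustration, not the original Perl wrapper, and the pipe-delimited record layout (title, author, subject), the `SAMPLE` records and the `/search?q=` link path are all assumptions made up for the example; the real export format isn't described here.

```python
import re
from typing import Iterable, Iterator
from urllib.parse import quote_plus


def grep_catalogue(lines: Iterable[str], keyword: str) -> Iterator[str]:
    """Yield every record line that contains the keyword, ignoring case."""
    # re.escape makes the keyword match literally, as a fixed-string grep would.
    pattern = re.compile(re.escape(keyword), re.IGNORECASE)
    for line in lines:
        if pattern.search(line):
            yield line.rstrip("\n")


def author_link(record: str) -> str:
    """Build a hypothetical "other works by this author" search link."""
    # Assumed layout: title|author|subject (not the real mainframe export).
    _title, author, _subject = record.split("|")
    return "/search?q=" + quote_plus(author)


# Made-up sample records standing in for the 400,000-line file.
SAMPLE = [
    "Example Novel One|Montgomery, L. M.|Fiction\n",
    "Example Novel Two|Montgomery, L. M.|Fiction\n",
    "Example Local History|Various|History\n",
]

matches = list(grep_catalogue(SAMPLE, "montgomery"))
for record in matches:
    print(record, "->", author_link(record))
```

In practice the `lines` argument could just as well be an open file handle, so the whole catalogue never has to sit in memory at once.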

I can’t recall how long this system was in place — it was eventually replaced by the current web-based OPAC with the Provincial Library converted to Dynix — but it fulfilled a useful role for a while. Those were the days.

While the Provincial Library web OPAC does allow for a search by ISBN — on its advanced search page — there’s no easy way to link directly to a particular book from another website simply by its ISBN.

So I created one.

If you go to a URL in the following form:

http://ruk.ca/isbn/XXXXXXXXXX

Where you replace XXXXXXXXXX with the actual ISBN you’re linking to, you’ll automatically get redirected to the record for that ISBN (if there is one) in the PEI Provincial Library web OPAC.

Here’s an example:

http://ruk.ca/isbn/0596003838

That’s a link directly to the record for Content Syndication with RSS by Ben Hammersley.

Note that this will obviously only be of value if there actually is an item for the ISBN in question in the library’s collection. Note as well that it looks like the library only started entering ISBNs into their OPAC recently, so the ISBN is missing from the records of earlier items.
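If you want to generate these links from your own site, something like the following Python sketch would do it. The URL pattern is the one given above; the ISBN-10 check-digit test and the function names `is_valid_isbn10` and `isbn_link` are my own additions for illustration, and nothing here implies the redirect service itself does any such validation.

```python
def is_valid_isbn10(isbn: str) -> bool:
    """Check the standard ISBN-10 checksum (weights 10 down to 1, mod 11)."""
    if len(isbn) != 10:
        return False
    total = 0
    for position, char in enumerate(isbn):
        if char in "0123456789":
            value = int(char)
        elif char in "Xx" and position == 9:
            value = 10  # "X" is only allowed as the final check character
        else:
            return False
        total += (10 - position) * value
    return total % 11 == 0


def isbn_link(isbn: str) -> str:
    """Return the ruk.ca redirect URL for a ten-character ISBN."""
    cleaned = isbn.replace("-", "").replace(" ", "").upper()
    if not is_valid_isbn10(cleaned):
        raise ValueError(f"not a valid ISBN-10: {isbn!r}")
    return "http://ruk.ca/isbn/" + cleaned


print(isbn_link("0-596-00383-8"))
```

Checking the ISBN before building the link only catches typos; it says nothing about whether the library actually holds the book.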

Following from this script to create an RSS feed of items checked out of the library, I’ve created another little tool: dvd2rss.pl is a Perl script to extract a list of DVDs from the Provincial Library OPAC, sorted so that the newest DVDs are listed first.

Like the earlier script, this one is designed to work with the web-based Dynix OPAC (and is probably to some degree dependent on the way the PEI catalogue works, and how results are rendered). It should be easy, however, to modify it to work with other Dynix installations, and to display other sorts of searches (new books, new kids’ books, etc.).

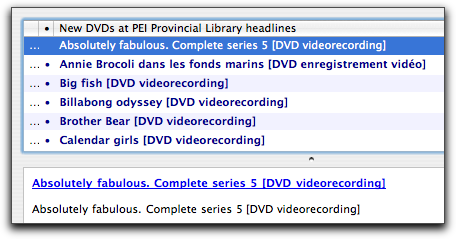

Here is the RSS feed that the script generates. I’d like to enhance it a little to pull the description of the DVDs from the OPAC too. As it is, though, each RSS item links to the DVD in question in the catalogue, so you can easily check its status, and put it on hold if you like.

Here’s what the result looks like in NetNewsWire:

A reminder that if you pay your Prince Edward Island property tax in installments, there’s one due on August 31, 2004 (tomorrow).

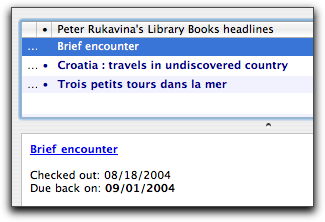

I am always forgetting when my library books are due. The PEI Provincial Library sends out handy email reminders, and they have a website that lists checked out items, but I need more (and more obvious) prodding.

The result is opac2rss.pl, a Perl script that automatically connects to the web-based Dynix (aka Epixtech) OPAC and grabs a list of the items I’ve got checked out and the date they are due. It then creates an RSS feed that I can read in my newsreader every morning.
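The feed-building half of that idea can be sketched roughly as follows. This is a Python illustration rather than the Perl script itself, and it skips the scraping step entirely: the `checked_out` list, the example.com links and the `build_due_feed` name are hypothetical stand-ins for whatever the OPAC actually returns.

```python
import xml.etree.ElementTree as ET
from datetime import date


def build_due_feed(items, feed_title, feed_link):
    """Return an RSS 2.0 document listing items, soonest due date first."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = feed_title
    ET.SubElement(channel, "link").text = feed_link
    ET.SubElement(channel, "description").text = "Library items currently checked out"
    # Each item is a (title, due_date, link) tuple; sort by due date.
    for title, due, link in sorted(items, key=lambda item: item[1]):
        entry = ET.SubElement(channel, "item")
        ET.SubElement(entry, "title").text = f"{title} (due {due.isoformat()})"
        ET.SubElement(entry, "link").text = link
    return ET.tostring(rss, encoding="unicode")


# Hypothetical data; the real script would scrape this from the OPAC.
checked_out = [
    ("Example Book B", date(2004, 9, 14), "http://example.com/item/2"),
    ("Example Book A", date(2004, 9, 7), "http://example.com/item/1"),
]

feed_xml = build_due_feed(checked_out, "My library books", "http://example.com/")
print(feed_xml)
```

Putting the due date in the item title is a design choice for newsreaders that only show headlines; a fuller feed might also carry it in a description element.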

The result looks like this:

The Alibi project in Nova Scotia was invaluable in getting this working, and the O’Reilly book Spidering Hacks was useful in understanding how to use the Perl HTML::TreeBuilder module.

Blogger reports 40 people on Prince Edward Island using their service. Most of them I’ve never seen. Neato.

Here is a page describing the archive of the sediment data my father gathered between 1968 and 2001 (aka “his life’s work”).

Prince Edward Island, like many jurisdictions, has a government-supported program of providing public Internet terminals to its citizens. Here in PEI this is called the Community Access Program, and it involves 76 locations across the Island that offer a variety of services, including, at the very minimum, one public Internet terminal.

As I’d never been to a so-called “CAP site,” I thought I should visit one, so I went up the road to the Atlantic Technology Centre, which opened this year, and has just expanded and improved its facilities.

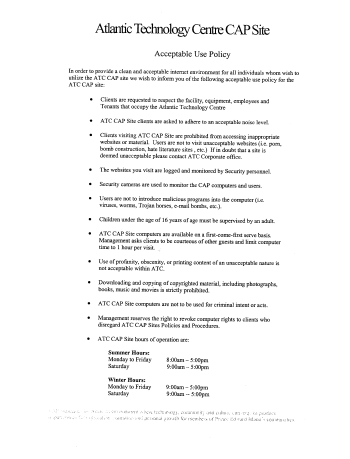

One of the first things I noticed was their Acceptable Use Policy, which is posted in front of every terminal. I took one down and asked for a photocopy of it, and the security guard at the front desk helpfully provided one for me (click for a larger image):

Now I must admit, before going any further, that in my heart I am a "let anyone do anything as long as it doesn't harm anyone else" kind of person. I don't believe in limits to expression. I don't believe in censorship. I don't believe in monitoring. So take everything I say with that in mind.

That said, I don’t have a fundamental problem with the notion of an Acceptable Use Policy inasmuch as a corporate entity needs to protect itself from liability. But I think the Technology Centre’s Acceptable Use Policy goes much further than that, and it does it in an annoyingly amateur fashion that results not only in setting up a draconian environment, but a foggy one at that.

It starts off slow: respect the facility, the people and the equipment and keep the noise down. I’ve got no problem with that.

Then things heat up:

Clients visiting ATC CAP Site are prohibited from accessing inappropriate websites or material. Users are not to visit unacceptable websites (i.e. porn, bomb construction, hate literature, etc.) If in doubt that a site is deemed inappropriate please contact ATC Corporate office.

Now even if you’re willing to set aside that there is a difference between expression about something and doing something (i.e. websites about building bombs vs. actually building bombs), the guideline about what’s “inappropriate” isn’t a technical legal definition. They’ve set up a situation where “inappropriate” is, in essence, “whatever we say is inappropriate.”

That’s wrong. Not only do I find this approach personally objectionable, but I would argue that it is in contravention of the Canadian Charter of Rights and Freedoms, which says, in part, that “Everyone has the following fundamental freedoms:”

- freedom of conscience and religion;

- freedom of thought, belief, opinion and expression, including freedom of the press and other media of communication;

- freedom of peaceful assembly; and

- freedom of association.

Further, as the mission statement of the Canadian Library Association says:

We believe that libraries and the principles of intellectual freedom and free universal access to information are key components of an open and democratic society.

I agree. And I think “access only to that information we deem acceptable” isn’t the same as “free universal access.”

The next two sections of the Acceptable Use Policy are the “hammer” they use to enforce the policy:

The websites you visit are logged and monitored by Security personnel.

Security cameras are used to monitor the CAP computers and users.

That seems just plain draconian to me: I can’t imagine how it’s possible for anyone to freely and openly explore the world of the Internet with an electronic watchdog literally looking over their shoulder. It’s a violation of privacy and an unnecessary limit on freedom, and combined with the earlier “we’ll decide what’s appropriate” clause, it sets up a dynamic where surfing the web becomes an intellectual minefield.

The requirement that users not install viruses, worms, etc. is reasonable (although it might usefully be accompanied by a warning that this doesn’t mean the public terminals are necessarily free of these).

The limitation that children under the age of 16 must be “supervised by an adult” seems wrongheaded. I think one of the most useful aspects of the Internet is the access it affords to older children and teenagers to information that they would have difficulty — perceived or actual — getting access to otherwise. A teen who wants information about birth control. Or the effects of drugs. Or communism. A more reasonable approach — and one adopted by the provincial library system — is that parents must give one-time initial consent for children under 15, after which they are allowed free, unaccompanied access.

The “first come, first served” policy seems reasonable.

The clause “Use of profanity, obscenity, or printing content of an unacceptable nature is no acceptable with ATC” suffers from the same problem as the prohibition against “inappropriate websites or material,” namely the lack of any definition of what counts as “unacceptable.”

The statement that “Downloading and copying of copyrighted material, including photographs, books, music and movies is strictly prohibited” is the blanket paranoid “you can’t run Kazaa” clause. Leaving aside that the computers at the CAP site afford no access to floppy, CD, USB or any other way of “downloading and copying,” a statement like this demands considerable elaboration. For example, there is considerable content on the Internet that, even though it is “copyrighted,” is freely available for personal use. This website, for example, is subject to this license, which allows copying.

Indeed it might be more appropriate to simply let the following clause, “ATC CAP Site computers are not to be used for criminal intent or acts,” stand, and leave out the copyright clause, for at least the prohibition against criminal acts has a dispute settlement mechanism, and leaves the responsibility with the user of the computer for determining the appropriateness of their actions.

Why is all of this important?

Because the Community Access Program is the cornerstone of the provincial and federal governments’ program to close the so-called “digital divide” and ensure universal access to the power of the Internet. Mitch Murphy, at the time the Minister of Technology, put it this way in 2000:

CAP sites enable communities across Prince Edward Island to take advantage of the Internet and other information technologies as a tool for social, cultural and economic development.

When CAP sites are cloaked in unacceptable Acceptable Use Policies, though, government is creating another sort of digital divide: between the people with the resources to freely browse the Internet from their home, without video camera monitoring, security personnel logging and arbitrary content restrictions, and those without their own means, who are left to browse in public under a sort of digital house arrest.

This has to change.

What are the chances that Aliant, after operating without its employees for these many months, will simply decide to do without employees altogether? Could they simply decide that it’s better to offer poor (or no) service, without the “hassle” of actual employees, in perpetuity?

I am
