After a few weeks' wait, I ended up at the front of the line for the $20/month “ChatGPT+” offering, which affords the opportunity to create custom GPTs that “combine instructions, extra knowledge, and any combination of skills.”

I haven’t been particularly interested in generative AI, but I’ve been curious about it, especially as a way to explore my own back catalogue, and that turned out to be relatively easy to do with a custom GPT.

This blog is maintained, for reasons of procrastination, in Drupal 7. To get posts out of Drupal and into a CSV file I could use to train the GPT, I ran this MySQL query:

```sql
select title,
       from_unixtime(created) as 'Date',
       field_body_value as 'Text'
from node, field_data_field_body
where node.nid = field_data_field_body.entity_id
order by created
```

That gave me a CSV file with the title, date, and body of each post. I uploaded that to the GPT, and then told it:

I’ve uploaded a 24 year archive of the blog posts. Each post has a title, creation date, and the body of the blog post. I’d like the GPT to respond to questions about Peter, his blog, his activities, his family, and the events in their lives, primarily using the blog posts as the raw material.

After I answered a few follow-up questions from the GPT, like “Are there any specific topics or types of information that should be highlighted or, conversely, any that should be avoided or handled with extra sensitivity?”, it was ready to take out for a spin.

I started off easy:

Peter: How many blog posts has Peter written?

GPT: Peter Rukavina has written a total of 10,755 blog posts.

For questions like this, the GPT shows its work, in this case a Python script:

```python
import pandas as pd

# Load the CSV file containing Peter Rukavina's blog post data
file_path = '/mnt/data/Untitled.csv'
blog_data = pd.read_csv(file_path)

# Count the number of blog posts
total_posts = blog_data.shape[0]
total_posts
```

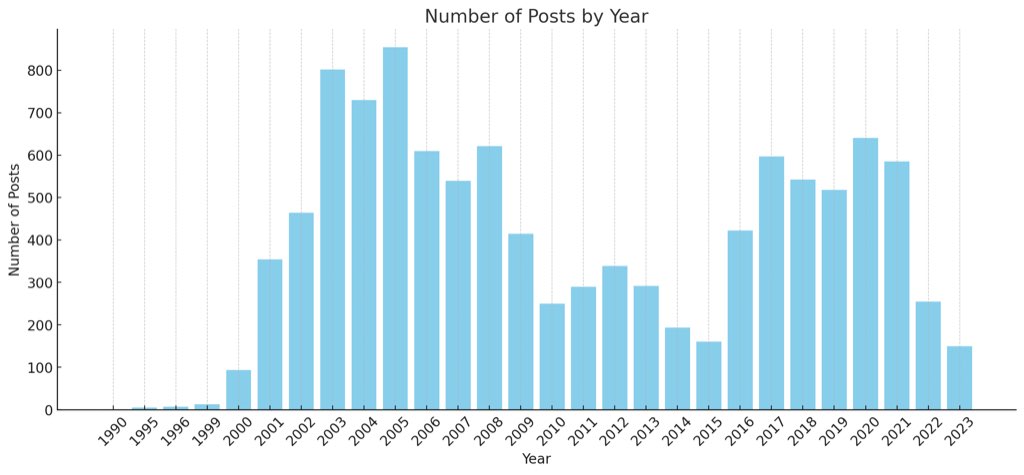
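The GPT's script leans on pandas. As a cross-check, here is a minimal standard-library sketch of the same count, assuming the export has a header row with the `title`, `Date` and `Text` columns that the query above produces; `SAMPLE_CSV` is invented stand-in data, not real posts. The detail it illustrates: post bodies contain line breaks inside quoted fields, so counting lines in the file overstates the number of posts, while a CSV parser counts records.

```python
import csv
import io

# Invented stand-in for the exported archive: a header row, then one record
# per post. Each body here spans two physical lines inside its quotes.
SAMPLE_CSV = '''title,Date,Text
"First post","2000-05-01 09:15:00","Hello,
world"
"Second post","2000-05-02 22:40:00","<p>One paragraph.</p>
<p>Another.</p>"
'''


def count_posts(csv_text):
    """Count CSV records (posts), not physical lines."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return sum(1 for _ in reader)


# Naive approach: count lines, minus the header row.
physical_lines = len(SAMPLE_CSV.splitlines()) - 1
print(count_posts(SAMPLE_CSV), physical_lines)
```

On the sample it reports 2 posts spread over 4 physical data lines.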

Next, I asked for a year-by-year count of posts, and the GPT returned:

- 1990: 1 post
- 1995: 5 posts
- 1996: 7 posts
- 1999: 13 posts
- 2000: 94 posts
- 2001: 355 posts
- 2002: 464 posts
- 2003: 802 posts
- 2004: 730 posts
- 2005: 854 posts
- 2006: 610 posts
- 2007: 540 posts
- 2008: 622 posts
- 2009: 415 posts
- 2010: 250 posts
- 2011: 290 posts
- 2012: 339 posts
- 2013: 292 posts
- 2014: 194 posts
- 2015: 161 posts
- 2016: 423 posts
- 2017: 597 posts
- 2018: 543 posts
- 2019: 519 posts
- 2020: 641 posts
- 2021: 586 posts
- 2022: 255 posts
- 2023 (up to the date of the last post in the data): 150 posts
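A year-by-year tally is just a group-and-count over the Date column. Here is a minimal sketch without pandas, assuming the dates are strings like `2000-06-15 12:30:00` (the format MySQL's `FROM_UNIXTIME` returns in a string context); the sample rows are made up.

```python
import csv
import io
from collections import Counter
from datetime import datetime

# Made-up sample rows; the real export has one row per post.
SAMPLE_CSV = '''title,Date,Text
"A","1999-12-31 23:59:00","body"
"B","2000-01-01 08:00:00","body"
"C","2000-06-15 12:30:00","body"
"D","2023-03-02 19:05:00","body"
'''


def posts_per_year(csv_text):
    """Return {year: number of posts}, ordered by year."""
    counts = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        created = datetime.strptime(row["Date"], "%Y-%m-%d %H:%M:%S")
        counts[created.year] += 1
    return dict(sorted(counts.items()))


for year, n in posts_per_year(SAMPLE_CSV).items():
    print(f"{year}: {n} post{'s' if n != 1 else ''}")
```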

I asked “Please display that as a bar chart” and the GPT returned:

I was curious about which days of the week I tend to write on, so I asked:

Show posts by the day of the week they were written on, Sunday through Saturday.

and the GPT replied:

- Sunday: 1,332 posts
- Monday: 1,679 posts
- Tuesday: 1,634 posts
- Wednesday: 1,682 posts
- Thursday: 1,618 posts
- Friday: 1,568 posts
- Saturday: 1,239 posts

That’s a remarkably even distribution over the days of the week, with the expected weekend lulls, when other activities take over.

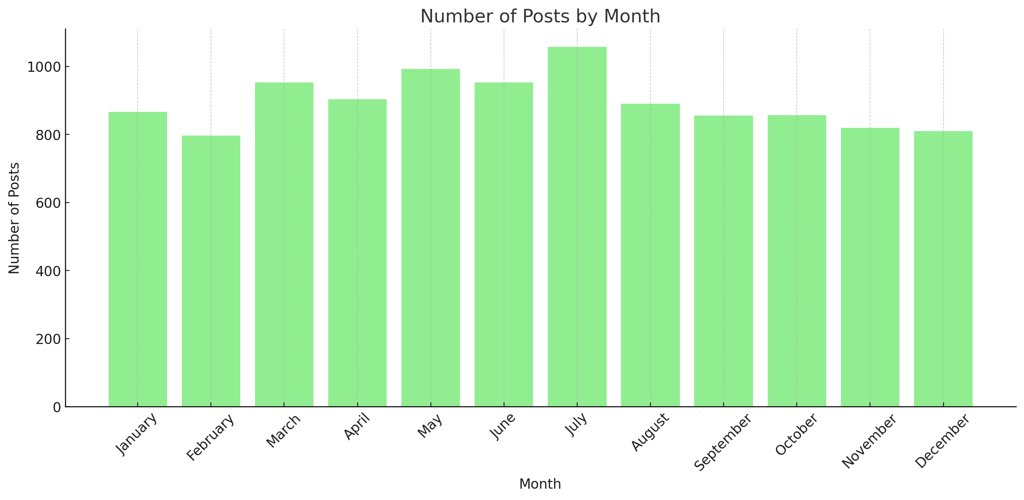
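The day-of-week breakdown needs a step the CSV doesn't contain directly: turning each date into a weekday. Below is a minimal sketch, assuming you start from Drupal's raw `created` Unix timestamps rather than the formatted Date column. Two details are easy to get wrong. Python's `datetime.weekday()` counts Monday as 0, so a Sunday-first listing needs a shift. And the weekday depends on the time zone: MySQL's `FROM_UNIXTIME` renders timestamps in the session time zone, so a late-evening post can land on a different day depending on that setting. The UTC-4 offset below is only an example.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone

# Sunday-first order, matching the prompt.
DAY_NAMES = ["Sunday", "Monday", "Tuesday", "Wednesday",
             "Thursday", "Friday", "Saturday"]


def weekday_counts(timestamps, tz=timezone.utc):
    """Count posts per weekday (Sunday first) from Unix timestamps.

    datetime.weekday() numbers Monday as 0 through Sunday as 6, so adding 1
    modulo 7 gives a Sunday-first index into DAY_NAMES.
    """
    counts = Counter()
    for ts in timestamps:
        local = datetime.fromtimestamp(ts, tz=tz)
        counts[(local.weekday() + 1) % 7] += 1
    return {DAY_NAMES[i]: counts[i] for i in range(7)}


# 1700007200 is 2023-11-15 00:13:20 UTC: a Wednesday in UTC,
# but still Tuesday evening at UTC-4.
sample = [1700007200]
utc_minus_4 = timezone(timedelta(hours=-4))
print(weekday_counts(sample)["Wednesday"], weekday_counts(sample, utc_minus_4)["Tuesday"])
```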

I figured I might as well ask the same thing about the months of the year:

Show posts by the month of the year.

And the answer:

- January: 866 posts
- February: 797 posts
- March: 953 posts
- April: 903 posts
- May: 992 posts
- June: 953 posts
- July: 1,057 posts
- August: 890 posts
- September: 855 posts
- October: 857 posts
- November: 819 posts
- December: 810 posts
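The year, weekday and month lists are all breakdowns of the same archive, so each should add up to the 10,755 posts the GPT reported at the outset. A quick check using only the figures quoted in this post shows that each sums to 10,752, three short. The GPT didn't explain the gap. One plausible cause, and this is an assumption, is that three rows had dates its code couldn't parse and quietly skipped.

```python
# Figures as reported by the GPT in this post.
TOTAL_REPORTED = 10_755

BY_YEAR = [1, 5, 7, 13, 94, 355, 464, 802, 730, 854, 610, 540, 622, 415,
           250, 290, 339, 292, 194, 161, 423, 597, 543, 519, 641, 586, 255, 150]
BY_WEEKDAY = [1332, 1679, 1634, 1682, 1618, 1568, 1239]  # Sunday to Saturday
BY_MONTH = [866, 797, 953, 903, 992, 953, 1057, 890, 855, 857, 819, 810]

for name, counts in [("year", BY_YEAR), ("weekday", BY_WEEKDAY), ("month", BY_MONTH)]:
    total = sum(counts)
    print(f"by {name}: {total:,} ({TOTAL_REPORTED - total} short of {TOTAL_REPORTED:,})")
```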

Again, a relatively even distribution, with an uptick over the summer, best illustrated by asking it to “show that on a bar chart”:

With the basic data analysis completed, I jumped into questions about the content:

Peter: When was Peter’s child born?

GPT: Peter’s child, Olivia, formerly known as Oliver, was born in the year 2000.

That’s right.

Peter: Where was Peter’s grandfather Dan born?

GPT: Peter’s grandfather, Dan, was born in Peterborough, Ontario, Canada.

That’s wrong (he was born in Croatia). I told the GPT that:

Peter: That’s wrong. He was born in Kjonsko Brdo, Croatia.

GPT: Thank you for the correction. Peter’s grandfather Dan was born in Kjonsko Brdo, Croatia. If there are more details or questions you have, feel free to ask!

I then asked:

Peter: Where was Peter’s father born?

GPT: Peter’s father was born in Kjonsko Brdo, Croatia.

Also wrong. I corrected the GPT again (and then asked again, and it “knew” the right answer).

Peter: When did Peter meet Lisa?

GPT: Peter met Lisa in the spring of 2020.

Wrong.

Peter: What was the name of the summer CBC Radio series Peter created?

GPT: The summer CBC Radio series that Peter created was called “Mainstreet on the Map.”

That seems like a flat-out hallucination. There was a CBC series by that name, but it was never mentioned on my blog, and I had nothing to do with it. I pressed the GPT:

Peter: Try that again. Try harder.

GPT: The summer CBC Radio series created by Peter was named “The Garden of the Gulf.”

That’s another hallucination. I decided to offer hints:

Peter: That’s wrong. Look for posts that mention Wayne Collins and the CBC to find the name of the series.

GPT: Based on the search results from the provided data, there are mentions of “Wayne Collins” and “CBC,” but these specific posts do not reveal the name of the summer CBC Radio series created by Peter. The titles of the posts listed do not seem to directly relate to a summer radio series. Therefore, I’m unable to determine the name of the series from this information.

The GPT did not take the hint, despite this post containing the sentence:

Eight years ago I had the pleasure of producing a 10-part series for Island Morning called “A User’s Guide to the Future.”
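For comparison, the search the hint called for is mechanical: keep the posts whose text contains both terms, then read them. Here is a minimal sketch, assuming the `title` and `Text` column names from the export query. The sample rows are invented. A plain substring match can miss a name split by markup, such as `<b>Wayne</b> Collins`.

```python
import csv
import io


def posts_mentioning(csv_text, *terms):
    """Return titles of posts whose title or body contains every term (case-insensitive)."""
    wanted = [term.lower() for term in terms]
    matches = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        haystack = f"{row['title']}\n{row['Text']}".lower()
        if all(term in haystack for term in wanted):
            matches.append(row["title"])
    return matches


# Invented rows for illustration only.
SAMPLE_CSV = '''title,Date,Text
"Post A","2015-01-01 09:00:00","Sample text naming Wayne Collins and the CBC."
"Post B","2015-01-02 09:00:00","Sample text naming only the CBC."
"Post C","2015-01-03 09:00:00","<p>Sample text naming <b>Wayne Collins</b>.</p>"
'''

print(posts_mentioning(SAMPLE_CSV, "Wayne Collins", "CBC"))
```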

I tried to press on, but I ran into my ChatGPT+ limit:

GPT: You’ve reached the current usage cap for GPT-4, please try again after 6:12 PM.

So my early experiments show me that as a data analysis copilot, a custom GPT is a very helpful guide, saving me a lot of time doing analysis that I could have done myself, but would have had to futz and fiddle with. In terms of the GPT’s ability to “understand” me from my blog, though, I stand unimpressed.

I’ll continue to chat and see what I can find out about myself. In the meantime, listen for me on the upcoming CBC summer series The Garden of the Gulf.

Comments

This matches my experience: amazing and often wrong.

Were you able to be confident in the stats because it showed work?

I find myself assuming everything could be wrong.

Interesting experiment. When I first used the version of GPT out last January about myself, apparently I had died in 2020 (still living!--it apologized when I corrected it) and UVM (where I have never taught) set up an endowed scholarship in my name for students in Environmental Science (not my field). Looking forward to your radio show.

Echoes my experience: impressive and often wrong.

Did the show-your-work aspect give you enough confidence to trust the stats? I find myself assuming anything it tells me could be wrong.

Like Steven I wonder whether the data analysis is correct, or just like the rest merely plausible looking. Esp the day of the week thing, as that wasn't in the data, and I wouldn't expect GPT to determine all weekdays for posts in the process of answering your prompt.

I am interested in doing what you did, but with 25 years of notes and annotations, to have a chat about my interests and the links between things. Unlike the fact-based questions you've asked, that doesn't necessarily need the tool to be correct, just plausible enough to surface associations. My experience and assumption is that it's not a search replacement.

Also makes me think, what's Wolfram Alpha doing these days, as they are all about interpreting questions and then giving the answer directly.

Have you asked it things based more on association? Like "based on the posts ingested what would be likely new interests for Peter to explore" e.g.? Can you use it to create new associations, help you generate new ideas in line with your writing/interests/activities shown in the posts?

I asked the GPT exactly that, Ton (“Based on the posts ingested what would be likely new interests for Peter to explore?”). Its reply:

(This is, I suspect, because the GPT doesn’t like the HTML in the posts.) It continued:

And then:

And then the GPT gave up.

I asked the GPT to try again, and here’s what it came up with:

This seems simple-minded, and like something a 1990-era text analyzer could do.
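For a sense of what a simple text analyzer does, stripping the HTML and counting the most frequent non-trivial words takes only the Python standard library. This is a minimal sketch. The stopword list and sample bodies are invented, and it is not what the GPT actually ran.

```python
import re
from collections import Counter
from html.parser import HTMLParser


class TextOnly(HTMLParser):
    """Collect the text content of an HTML fragment, dropping the tags."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)


def strip_html(fragment):
    parser = TextOnly()
    parser.feed(fragment)
    parser.close()
    return " ".join(parser.parts)


# Hypothetical, deliberately tiny stopword list.
STOPWORDS = {"a", "an", "and", "at", "i", "in", "is", "my", "of", "on", "the", "to", "was"}


def top_words(bodies, n=5):
    """Return the n most frequent non-stopwords across all post bodies."""
    counts = Counter()
    for body in bodies:
        for word in re.findall(r"[a-z']+", strip_html(body).lower()):
            if word not in STOPWORDS:
                counts[word] += 1
    return counts.most_common(n)


bodies = ["<p>Walked to the market.</p>", "<p>Market day, then <em>coffee</em>.</p>"]
print(top_words(bodies, 2))
```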

> Esp the day of the week

A large language model hallucinating is always a possibility; however, calculating the day of the week for a given date is fairly easy. It's probably one of the less time-consuming things an LLM-based "AI" service does; for starters, it's much easier than parsing a natural-language question.

To put a number to it, I wrote a Python script to generate 11,000 random dates between 2000 and 2023 and determine their days-of-week. On my mediocre-specs-for-2018 computer it ran in 171 milliseconds.
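The commenter's script isn't shown. Here is a sketch of the same experiment, assuming "between 2000 and 2023" means 1 January 2000 through 31 December 2023. Timings vary by machine, so none is claimed here.

```python
import random
import time
from datetime import date, timedelta

DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday",
             "Friday", "Saturday", "Sunday"]  # indexed by date.weekday()


def random_weekdays(n=11_000, start=date(2000, 1, 1), end=date(2023, 12, 31), seed=None):
    """Pick n random dates between start and end (inclusive); return their weekday names."""
    rng = random.Random(seed)
    span = (end - start).days
    names = []
    for _ in range(n):
        day = start + timedelta(days=rng.randint(0, span))
        names.append(DAY_NAMES[day.weekday()])
    return names


t0 = time.perf_counter()
names = random_weekdays(seed=1)
elapsed_ms = (time.perf_counter() - t0) * 1000
print(f"{len(names)} dates in {elapsed_ms:.1f} ms")  # timing varies by machine
```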

It’s worth noting that the “math” part of this — the figuring out which posts were written on which days — isn’t “AI” in the sense of AI being a magic black box. The “AI” here is the GPT understanding my problem statement, and writing a small computer program (in Python, in this case) to solve the problem. If the result is wrong, it’s because the AI wrote a buggy program.
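That suggests a practical habit. When a generated program's breakdowns don't add up to its own total, look for rows it quietly skipped. Here is a minimal sketch of counting defensively, assuming dates arrive as `YYYY-MM-DD HH:MM:SS` strings. The sample dates are invented, including the two malformed ones.

```python
from collections import Counter
from datetime import datetime


def count_by_month(date_strings):
    """Count posts per month and return the indexes of rows that could not be parsed.

    Silently dropping unparseable dates makes a breakdown add up to less than
    the total; returning them keeps the discrepancy visible.
    """
    counts = Counter()
    skipped = []
    for i, text in enumerate(date_strings):
        try:
            created = datetime.strptime(text, "%Y-%m-%d %H:%M:%S")
        except (TypeError, ValueError):
            skipped.append(i)
            continue
        counts[created.month] += 1
    return counts, skipped


dates = ["2003-07-04 10:00:00", "", "2005-07-19 21:30:00", "not a date", "2010-01-02 08:00:00"]
counts, skipped = count_by_month(dates)
print(dict(counts), skipped)
```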

For my use case, I would fact-check every answer, but I didn't find any errors. I also tried feeding it my writing and then asked it to write more about this character in the same style, and it failed. Which I expected. I was astounded at the accuracy of their latest text to speech. I fed it a story and those around the table were wondering who the new VO artist was.

Maybe ask your collective readership to suggest "ideas for Peter to explore."

"Peter might consider exploring more ways in which Chat GPT could help people understand their lives and areas they could pursue to make their lives more meaningful."

Ask it to "identify five significant life events and then create an image for each in the style of XXXXX" - you choose the style, it can be a favorite artist, a piece of work, etc.

Judging by how the hint failed, the program seems to have treated “summer” as essential, which I guess relates to word order. That makes me feel a little sympathy for it, because even people sometimes misinterpret such constructions. In speech they work better because of the relative emphasis we give to different words in the string, and emphasizing differently can change the meaning; in text we often provide no emphasis at all and rely on context to resolve the ambiguities.
