The Venn of “links in Robin Sloan’s email newsletters” and “things that interest me” is incredibly high. I noticed this especially with the April Edition: I read the newsletter in Readwise Reader, and I was adding almost every link to the queue for reading later in Reader.

So I decided that I needed a Robin Sloan Machine: a bit of code that would take all the links in the public URL for the newsletter and add them to Reader for later reading.

My ask to ChatGPT:

I have a URL that I want to extract all the links from, and then feed each link to the Readwise Reader API, adding each URL, with title, and tags of my choosing, as Readwise items. Please provide me with a PHP script that will do that. Make the original URL an argument for the script, and make the tag(s) an argument to the script, so that I can pass these as parameters and feed other URLs in a similar way.

The first pass at this was fully functional. I tinkered a little in subsequent prompts.

Anticipating that I might want to call this as an API endpoint on my own server at some point:

Please modify the PHP script so that it will accept EITHER parameters passed on the command link, OR the URL query parameters “url” and “tags.”

Tweaking the Reader API to clean up the HTML, and realizing that my script doesn’t need to figure out the title, as Reader will do that itself:

Modify the script so that it doesn’t need to pass the “Title” parameter to the Readwise API — the API will calculate this on its own when it reads the URL. Also, add the API parameter “should_clean_html” when calling the Readwise Reader API, and set it to “true”.

And, finally:

Modify the script so that multiple links found that are different only because they have a hash tag at the end are not sent — just send ONE to Readwise. And, finally, add code to pay attention to the note in the API about rate limiting.

Here’s the final script:

<?php

$isCli = php_sapi_name() === 'cli';

if ($isCli) {

if ($argc < 3) {

echo "Usage: php index.php <source_url> <tag1,tag2,...>\n";

exit(1);

}

$sourceUrl = $argv[1];

$tagsInput = $argv[2];

} else {

if (!isset($_GET['url']) || !isset($_GET['tags'])) {

header("HTTP/1.1 400 Bad Request");

echo "Missing 'url' or 'tags' query parameter.";

exit;

}

$sourceUrl = $_GET['url'];

$tagsInput = $_GET['tags'];

}

$tags = array_map('trim', explode(',', $tagsInput));

$readwiseToken = 'YOUR_READWISE_API_TOKEN'; // Replace with your actual token

function fetchHtml($url) {

$context = stream_context_create([

'http' => ['header' => "User-Agent: PHP script\r\n"]

]);

return @file_get_contents($url, false, $context);

}

function normalizeUrl($url) {

$parsed = parse_url($url);

if (!$parsed) return $url;

// Remove fragment

unset($parsed['fragment']);

// Rebuild URL without fragment

$normalized = '';

if (isset($parsed['scheme'])) $normalized .= $parsed['scheme'] . '://';

if (isset($parsed['user'])) {

$normalized .= $parsed['user'];

if (isset($parsed['pass'])) $normalized .= ':' . $parsed['pass'];

$normalized .= '@';

}

if (isset($parsed['host'])) $normalized .= $parsed['host'];

if (isset($parsed['port'])) $normalized .= ':' . $parsed['port'];

if (isset($parsed['path'])) $normalized .= $parsed['path'];

if (isset($parsed['query'])) $normalized .= '?' . $parsed['query'];

return $normalized;

}

function extractLinks($html, $baseUrl) {

libxml_use_internal_errors(true);

$dom = new DOMDocument;

$dom->loadHTML($html);

$xpath = new DOMXPath($dom);

$links = [];

foreach ($xpath->query('//a[@href]') as $node) {

$href = trim($node->getAttribute('href'));

if (!$href || strpos($href, 'javascript:') === 0) continue;

// Convert to absolute URL if needed

if (parse_url($href, PHP_URL_SCHEME) === null) {

$href = rtrim($baseUrl, '/') . '/' . ltrim($href, '/');

}

$normalized = normalizeUrl($href);

$links[$normalized] = true; // use keys for uniqueness

}

return array_keys($links);

}

function sendToReadwise($url, $tags, $token) {

$payload = [

'url' => $url,

'tags' => $tags,

'should_clean_html' => true

];

$ch = curl_init('https://readwise.io/api/v3/save/');

curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);

curl_setopt($ch, CURLOPT_HEADER, true);

curl_setopt($ch, CURLOPT_HTTPHEADER, [

'Authorization: Token ' . $token,

'Content-Type: application/json'

]);

curl_setopt($ch, CURLOPT_POSTFIELDS, json_encode($payload));

$response = curl_exec($ch);

$headerSize = curl_getinfo($ch, CURLINFO_HEADER_SIZE);

$header = substr($response, 0, $headerSize);

$body = substr($response, $headerSize);

$httpCode = curl_getinfo($ch, CURLINFO_HTTP_CODE);

if (curl_errno($ch)) {

echo "cURL error for $url: " . curl_error($ch) . "\n";

} elseif ($httpCode == 429) {

echo "Rate limit hit for $url. ";

preg_match('/Retry-After: (\d+)/i', $header, $matches);

$retryAfter = isset($matches[1]) ? (int)$matches[1] : 60;

echo "Waiting for $retryAfter seconds...\n";

sleep($retryAfter);

sendToReadwise($url, $tags, $token); // Retry

} elseif ($httpCode >= 200 && $httpCode < 300) {

echo "Sent: $url\n";

} else {

echo "Failed to send $url. HTTP $httpCode\n";

echo $body . "\n";

}

curl_close($ch);

}

// MAIN

$html = fetchHtml($sourceUrl);

if (!$html) {

echo "Failed to fetch source URL: $sourceUrl\n";

exit(1);

}

$links = extractLinks($html, $sourceUrl);

echo "Found " . count($links) . " unique normalized links on $sourceUrl\n";

foreach ($links as $link) {

sendToReadwise($link, $tags, $readwiseToken);

sleep(1); // basic pacing

}I ran this on my Mac with:

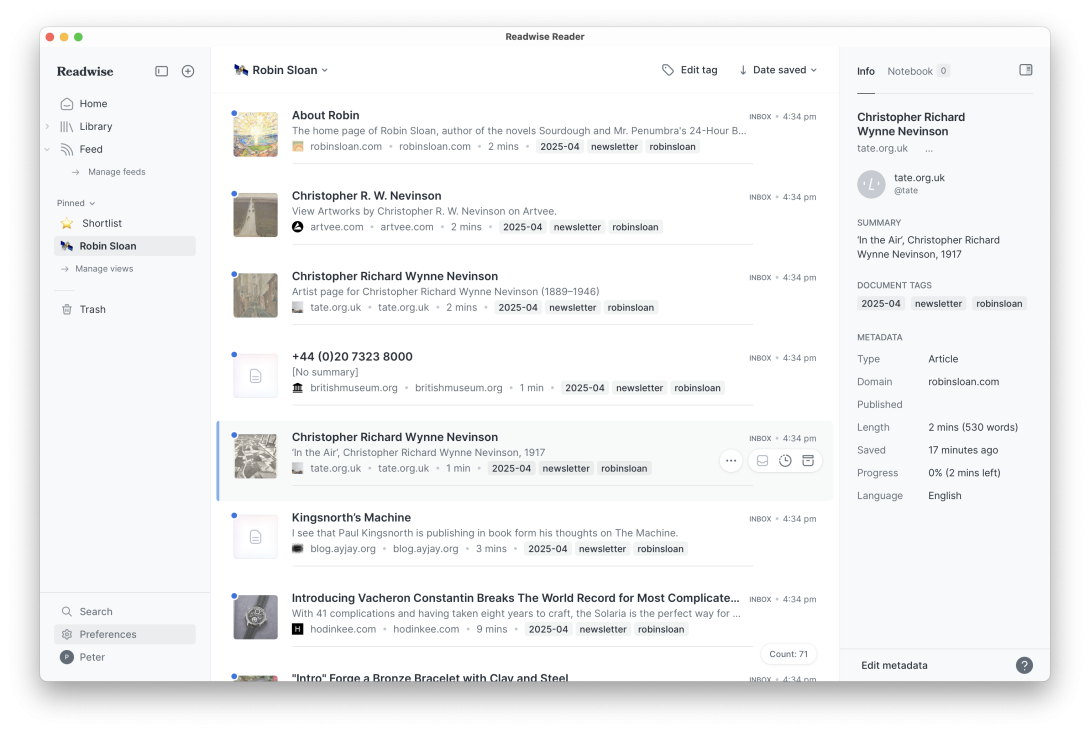

php index.php https://www.robinsloan.com/newsletters/golden-sardine/ "robinsloan,newsletter,2025-04"I set it to run, and ended up with 85 new links in my Reader for browsing. I created a View in Reader to segregate them into their own handy area (tag:robinsloan):

From start to finish this took about 35 minutes to create and refine. It would have been possible without ChatGPT sitting on my shoulder whispering code in my ear, but it would have taken a lot longer.

I am

I am

Comments

I love this. I recognise…

I love this. I recognise that ChatGPT has a lot of shortcomings when it comes to accuracy or pandering, but it has really unlocked things for me in terms of creating little Python scripts, or telling me how to do quick little commands on the Linux command line. It's been an enabler. In the past I've struggled to google the specific thing I'm trying to do and not knowing how to adapt it to my needs. This way it does pretty much what I want, it often adds inline comments to the code so I can understand a bit bettter what's happening, and then much like how I learned HTML 20+ years ago, I can start to 'read' the code blocks and figure out what is doing what.

Add new comment